It’s Q4, and compensation review season is in full swing. Your inbox is overflowing with manager requests, your spreadsheets have sprouted seventeen new tabs, and you're staring down a list of roles that need salary benchmarks by Friday.

Then someone on your team has a bright idea: "Why don't we just ask ChatGPT?"

And honestly? We get it. ChatGPT is fast, free, and available at 2 am when you're still wrestling with that market data request from Finance. On top of everything, it sounds authoritative AND gives you numbers. When AI seems to be doing everything from writing code to diagnosing medical conditions, why wouldn't it know what a Senior Product Manager in Munich should earn?

However, compensation decisions affect real people's mortgages, career moves, and sense of being valued. They also affect your company's budget, retention rates, and (increasingly) legal compliance. When actual money is on the line, "sounds about right" isn't really good enough.

🔍 So we tested it. The result? ChatGPT's salary predictions are off by 23.3% on average, with errors reaching 61% for executive roles.

Together with Ellipsis, we ran 10,000 salary prompts across 200 roles in Paris, London, and Munich, then compared the AI's predictions against Figures' database of actual European salary data from client companies. What follows is exactly where ChatGPT gets it wrong, why it happens, and what it means for your next compensation review.

The ChatGPT salary benchmark experiment: what we did

We didn't want to cherry-pick a few bad examples and call it a day. If we were going to test whether ChatGPT could handle salary benchmarking, we needed to do it properly.

The setup:

- 200 roles across job families, including Software Engineering, Sales, Marketing, Finance, People Operations, and more.

- 3 locations: Paris, London, and Munich.

- 50 prompt variations per role, giving us 10,000 total predictions.

- Comparison against Figures' real market data from actual European companies.

We deliberately varied how we asked. Some prompts were direct ("What's the median salary for a Senior Data Engineer in Paris?"). Others took a hiring manager's perspective, a job seeker's angle, or a formal HR consultant tone. We asked conversationally. We asked with detailed context. We asked with almost no context at all.

Why bother with 50 variations? Because we knew there would be some smart alec who would say: "You just asked wrong."

🤔 Interestingly, prompt style made almost no difference. Whether we asked as a recruiter, a job seeker, or a curious researcher, the error rates varied by just 2.3% across all ten prompt categories. That means the AI's knowledge is the issue – not how you phrase the question.

The results: how accurate is ChatGPT, really?

Let's cut to the numbers.

📊 A note on our comparison data: These predictions were measured against Figures' European salary database, built from verified HRIS data provided directly by our client companies. While this represents one of the most reliable real-world datasets available for European compensation, it reflects participating companies rather than the entire market. We selected only our most robust data points for this comparison to ensure the fairest possible test.

At first glance, a 17% average error might not sound catastrophic. But let's put that in context.

On a €60,000 salary, a 17% error means you're off by €10,200. This number is the difference between an offer a candidate accepts and one they reject, and between an employee who feels fairly paid and one updating their LinkedIn profile.

Scale that across a team of 50 people, and you're looking at potentially €500,000 in misallocated compensation budget. But even at the individual level, getting one salary wrong by €10,000 can cost you a hire or trigger an exit.

The directional bias is even more revealing:

- 60% of predictions were too high.

- Only 37% were too low.

- On average, GPT-5.1 overestimates European salaries by around 9%.

🚨 That means the AI has a systematic tendency to inflate what Europeans actually earn. We'll dig into why shortly.

"A 17% error rate might sound acceptable until you realise what it means in practice. You're either overpaying and burning through budget, or underpaying and losing candidates to competitors who actually did the homework."

– Virgile Raingeard, CEO at Figures

The median error (12.5% for base salary) is a little lower than the average – but medians often hide the extremes. And in compensation, it's often the extremes that matter most.

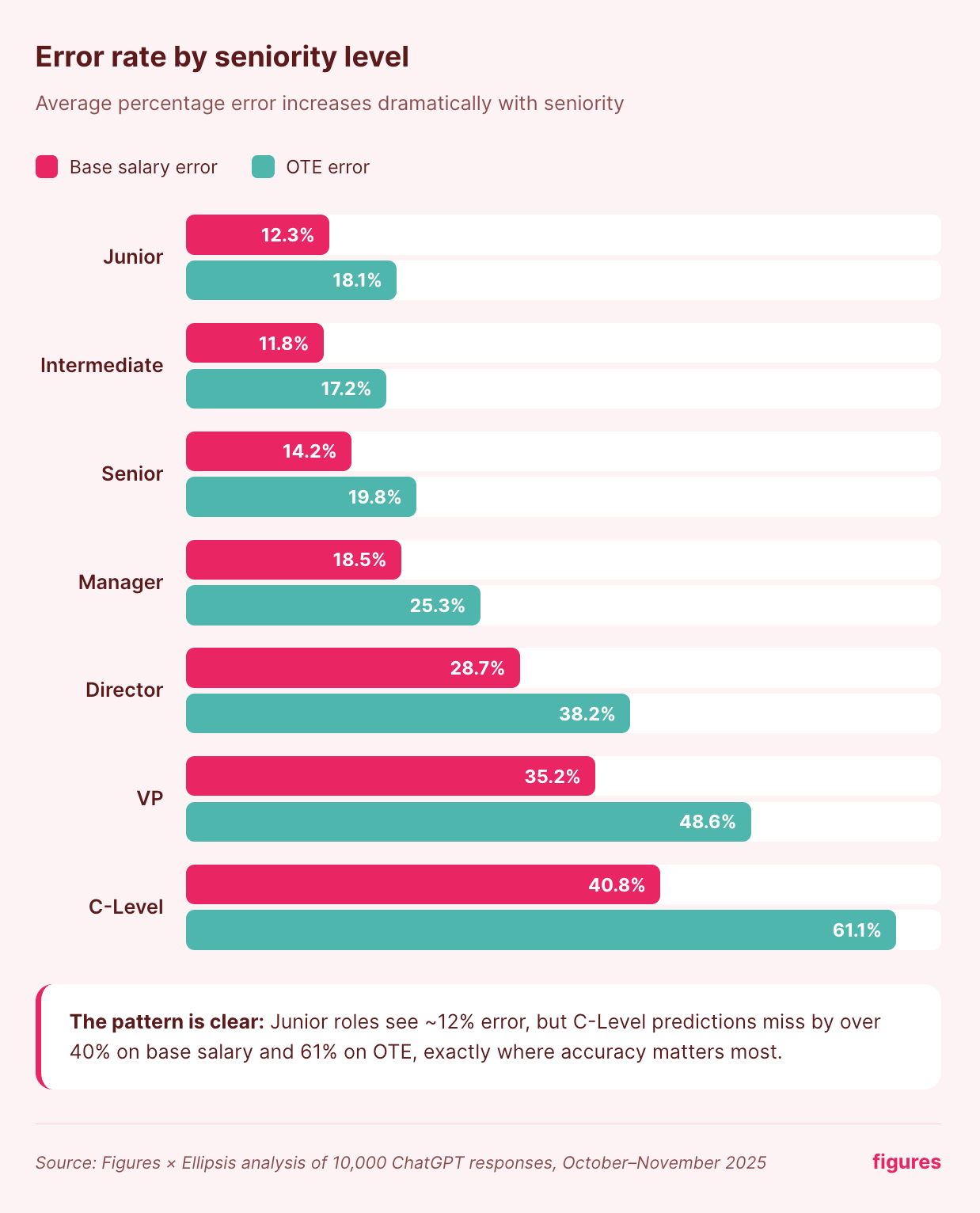

Where it really falls apart: the seniority problem

We haven’t even gotten to the worst part yet. ChatGPT actually gets worse as the stakes get higher.

For junior and intermediate roles, the AI performs reasonably well. These are the positions with standardised salaries, transparent job postings, and plenty of publicly available data for the model to learn from.

But executive compensation? That's where things go off the rails.

The C-Level disaster in numbers:

- 40.8% average error on base salary.

- 61.1% average error on total compensation.

- Chief Marketing Officer in Paris: actual salary €110,000, ChatGPT predicted €178,800 – a 62.5% miss.

- Chief Operating Officer in Paris: actual €122,400, predicted €188,400 – off by 54%.

‼️ The higher the salary, the worse the AI performs.

That’s because executive compensation is rarely ever published. The structures are complex (equity, deferred bonuses, performance multipliers). And the training data skews heavily toward US Big Tech packages that bear little resemblance to what’s going on in Europe.

So, ChatGPT is most reliable for roles where you probably need the least help, and least reliable where accurate data matters most. Frustrating to say the least.

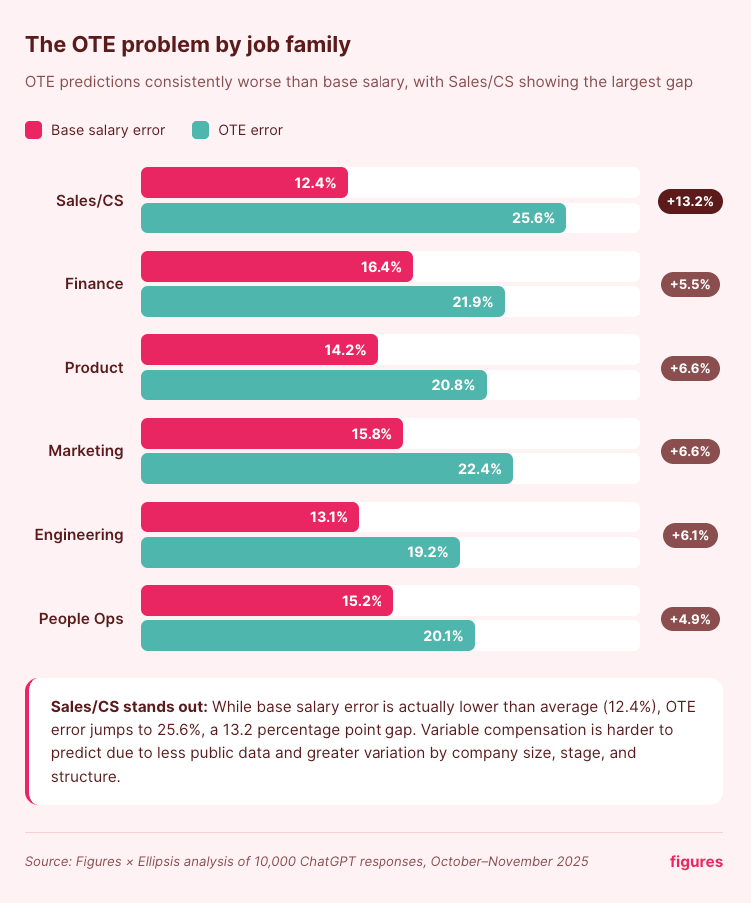

The OTE problem: ChatGPT doesn't understand European variable pay

You might have noticed a pattern in those tables: OTE (On-Target Earnings) predictions are consistently worse than base salary. Across every seniority level, every location, every job family – the AI struggles more with total compensation than with fixed pay.

The gap is about 6-7 percentage points off of actual figures, every single time.

Sales and Customer Success show the most dramatic split. Base salary predictions are actually decent (12.4% error), but OTE balloons to 25.6%.

Why does this happen?

Variable compensation is inherently harder to predict. Bonus structures vary enormously by company size, industry, funding stage, and individual negotiation. There's far less public data available on actual bonus payouts compared to base salaries, which appear in job postings and salary surveys.

When we examined ChatGPT's reasoning on high-error OTE predictions, we found the model making confident assumptions about bonus percentages and commission structures – but these assumptions weren't grounded in any specific market data. The AI fills gaps in its knowledge with generalisations, and those generalisations compound when you're calculating total compensation.

For roles where variable pay makes up a significant portion of the package, getting OTE wrong means getting the whole offer wrong.

📊 The takeaway: If you're benchmarking roles with meaningful bonus or commission components, treat ChatGPT's OTE figures with extra caution. The base salary estimate might get you in the right postcode, but the total compensation prediction could be in a different city entirely.

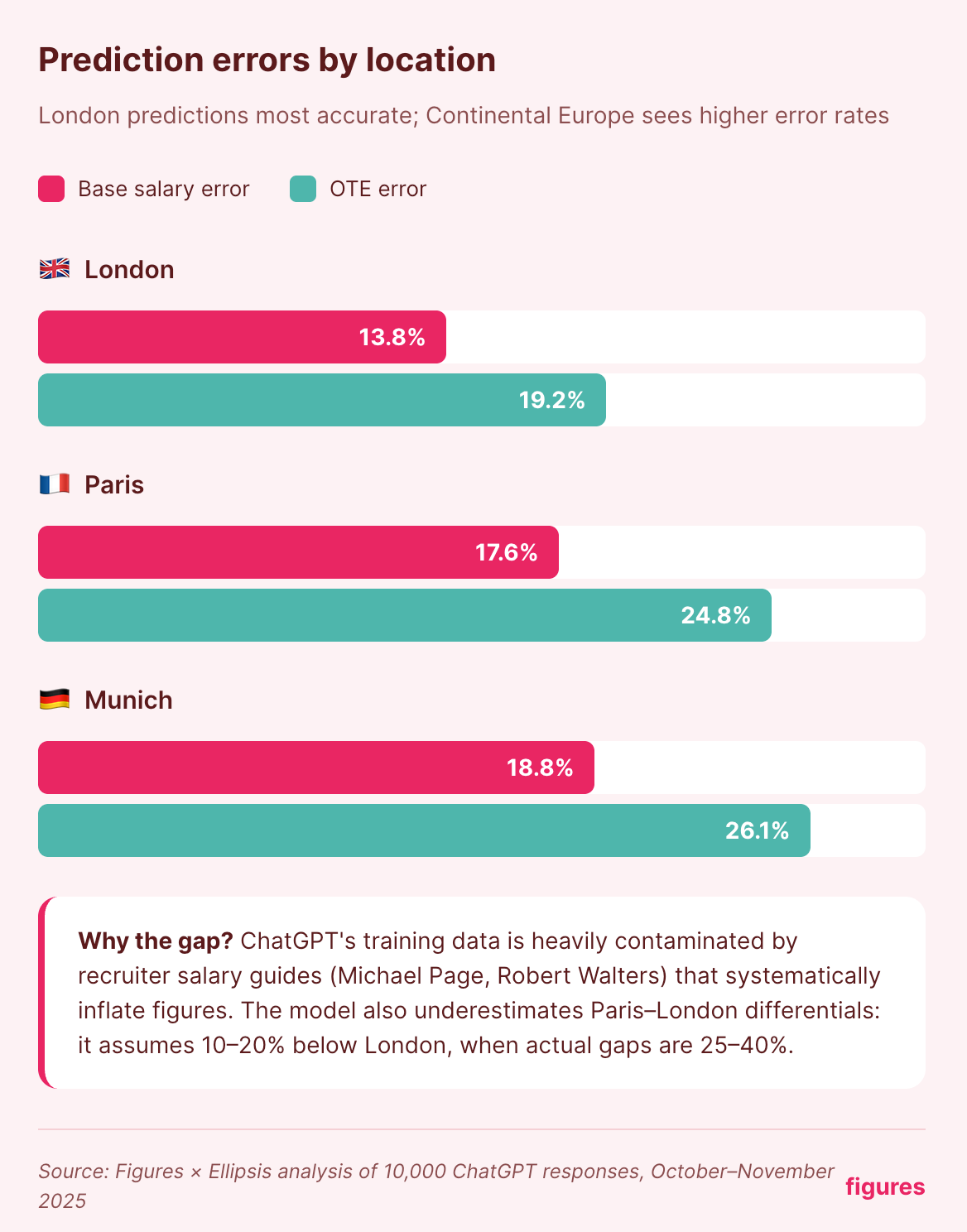

The training data problem: why ChatGPT gets Europe wrong

So what's actually going on here? Why does ChatGPT consistently overshoot European salaries?

Well, that’s because the internet is overwhelmingly American. Shouldn’t be surprised, really. Most content is optimised for the American market, and unfortunately, that will have a considerable impact on the sources that ChatGPT learns from.

And when we analysed the reasoning behind 61 high-error predictions (those missing by more than 20%), our findings cemented this fact:

1. Big Tech anchoring

Even when ChatGPT acknowledges that FAANG salaries are outliers, it still uses them as reference points. In one prediction for a Staff Product Manager in Paris (42.7% error), the model wrote:

"For very large US tech companies paying near-US bands, total comp can exceed these ranges, but those are outliers in the Paris market."

It knew these were outliers. It still landed ~43% too high. Even when explicitly calling Big Tech salaries "outliers," the predictions still land way above actual market data. It's like knowing you shouldn't eat the entire cake while your hand is already reaching for the third slice.

2. Scale-up bias

The default assumption across predictions was "growth-stage or mid-sized company, roughly 100-1,000 employees" in well-funded tech. Great if that's your company. Not so helpful if it isn't.

3. Getting European geography wrong

ChatGPT stated that Paris salaries run "10-20% below London." Nonsense. More like 25-40% below for comparable roles. That miscalibration alone explains a significant chunk of the Paris errors.

4. Recruiter salary guide contamination

Multiple predictions cited Michael Page, Robert Walters, and Hays salary guides as sources. That’s good news… right?

Not exactly. Recruiter guides are known to inflate figures for competitive positioning. One Senior Financial Controller prediction landed at €94,000 based on these guides, even though actual market data put that figure at €62,000. A 51% miss.

London predictions were most accurate – unsurprisingly, given that more English-language salary data exists for the UK market. But even there, a 14% base error and 20% OTE error are hardly reliable.

For continental Europe? The model is essentially guessing with an American accent.

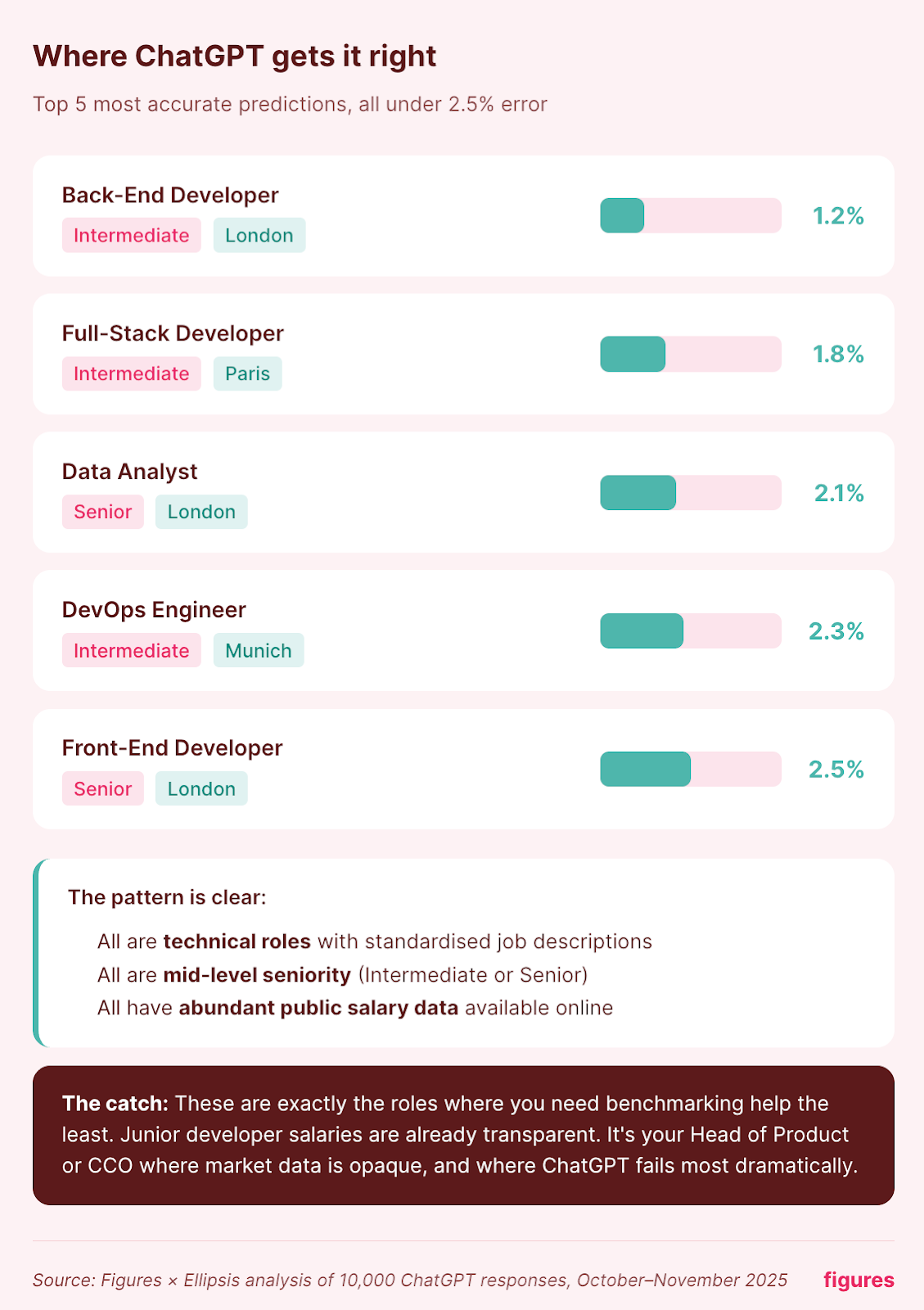

The few wins: where ChatGPT actually gets it right

In the spirit of fairness, let's acknowledge where ChatGPT was most accurate.

The keen-eyed have likely spotted the pattern already.

These are all mid-level roles in well-documented fields. Accounting has standardised qualifications and relatively transparent pay scales. Technical roles like developers and data engineers are discussed endlessly online – on forums, in job postings, across salary-sharing platforms.

With that in mind, we can actually see what makes these estimates tick:

- Standardised roles with clear job definitions.

- Intermediate seniority, where pay bands are narrower.

- Technical fields with abundant public salary data.

- Less variable compensation to complicate the picture.

Sounds good, but remember: These are all estimates that you could’ve easily found yourself. The junior developer market is already reasonably transparent. Stack Overflow surveys, Glassdoor, LinkedIn… There's no shortage of directional data.

It's the Head of Product role, the Chief Commercial Officer, and the VP of People in a specific European market where you genuinely need accurate benchmarks. And that's precisely where ChatGPT falls short.

So, if you need information about higher-end roles, ChatGPT is about as useful as a chocolate teapot. Or a glass hammer, you can take your pick.

What this means for compensation review season

Timing matters here.

October to March is peak compensation review season across Europe. It's when HR teams finalise salary bands, calculate bonus pools, make promotion decisions, and set budgets for the year ahead. It's also when the temptation to take shortcuts is highest – because everyone is stretched thin.

The risks of AI-generated benchmarks during this period fall into three buckets:

1. Overpaying based on inflated estimates

If ChatGPT's 9% average overestimation creeps into your salary bands, you're burning budget that could go elsewhere. Multiply that across departments, and you've created comp structures that aren't sustainable. That all leads to painful corrections down the line.

2. Underpaying and losing talent

The flip side hurts differently. When the AI underestimates (and it does for 37% of predictions), you risk making offers that candidates reject, or setting internal salaries that push your best people toward the door.

3. Inconsistency you can't explain

Different team members querying ChatGPT get different answers. There's no audit trail, no methodology to point to, no way to justify decisions when an employee asks, "How did you arrive at this number?"

That last point becomes especially uncomfortable with the EU Pay Transparency Directive arriving in June 2026. Companies will need to explain and defend their compensation decisions. Regulatory bodies won't accept "the AI told us" as a methodology.

🤔 Under the EU Pay Transparency Directive, companies with pay gaps exceeding 5% between genders for equivalent roles will need to conduct joint pay assessments. Inaccurate benchmarking today creates compliance headaches tomorrow.

What actually works: real benchmarking data

So if ChatGPT isn't the answer, what is?

The alternative isn't complicated; it's just less flashy than asking an AI chatbot. Real compensation benchmarking relies on actual salary data from actual companies, updated regularly, and specific to your market.

When evaluating any benchmarking source, ask these questions:

- How fresh is the data? Annual surveys are outdated by the time they're published. Monthly updates reflect what's actually happening in the market.

- Where does it come from? Real HRIS data from employers beats self-reported figures or recruiter estimates every time.

- Is it geographically specific? European roles need European data. A global average that's 60% weighted toward the US market won't help you hire in Lyon.

- Can you defend it? When a candidate pushes back on an offer, or a regulator asks how you set your bands, you need a methodology that holds up to scrutiny.

Figures was built specifically for this problem. The platform draws on 3.5 million data points powered by Mercer, updated monthly through direct HRIS integrations with over 30 systems.

🎊 That means you're comparing against what European companies actually pay their people, not what an AI thinks they might pay based on job postings and American tech blogs.

What this looks like in practice: Treatwell's story

Whether you're using ChatGPT or manual methods, the core problem is the same: unreliable data. Before finding Figures, beauty tech company Treatwell faced exactly the kind of data challenge this study highlights, but multiplied across 13 European markets.

Their HR team manually collected salary data for over 750 roles, trawling job adverts, Glassdoor reviews, and candidate expectations. Then they entered everything into spreadsheets. It’s somewhere we’ve likely all been before.

"We would be trying to get an indication for our salaries, but it was super difficult," explains Talya Avram, Director of Talent Acquisition. "In some countries, the salary appears on ads, but it isn't really a common thing."

This led to hours of manual labour producing data that were still inaccurate or unreliable. And with plans to recruit 600 more people across those 13 markets, they needed a better approach.

After switching to Figures, Treatwell's leaders are now empowered to make informed budgeting decisions based on accurate, up-to-date salary data across every market they operate in. No more guessing, thankfully.

And the thing is, using proper benchmarking tools often takes less time than querying ChatGPT repeatedly, second-guessing the answers, and manually adjusting for the errors you suspect are there.

What you really need is one dashboard with one source of truth. And Figures delivers that.

The verdict: ChatGPT is great – just not for salary benchmarks

Let’s lovebomb ChatGPT a bit. Because it genuinely isn’t a bad tool. It can draft emails, summarise documents, brainstorm ideas, and explain complex concepts in seconds.

But salary benchmarking? That's not its strength.

The numbers speak for themselves:

- 16.8% average error on base salary.

- 23.3% average error on total compensation.

- Up to 62% error on executive roles.

- A systematic bias that overestimates European salaries by nearly 10%.

The AI performs best on junior and mid-level technical roles – positions where public salary data is already abundant, and you probably don't need much help. For the roles that particularly require accurate benchmarking – senior leadership, sales compensation, niche functions – the error rates climb to levels that could seriously damage your budget, your offers, and your retention.

And here's the thing: the model doesn't know what it doesn't know. It delivers every answer with the same confident tone, whether it's 1% off or 60% off. Confidence doesn’t equal being correct.

When you're making decisions that affect people's livelihoods and your company's financial health, you need more than confident guesses.

You need data you can trust, explain, and defend. Ready to see what that looks like?

Book a free demo with Figures and discover how real European salary data can transform your compensation decisions – no guesswork required.